Web Crawlers

Web crawlers are computer programs that traverse the World Wide Web and harvest information from pages according to a set of rules. These programs are also called “web robots” or “bots.” They are useful for many purposes, such as gathering information about particular topics, monitoring websites for changes, or checking the links on an individual webpage.

Web crawlers are like the individuals who organize a disorganized library’s books into a card catalog so those visiting the library can quickly and easily find what they need.

The term “spiders” is also used for these bots because they crawl across the web, much as real spiders crawl across their webs.

How Do Site Crawlers Work?

In principle, a crawler is like a programmed librarian. It looks for information on the web, assigns it to specific categories, and then indexes and catalogs it so that the crawled information can be retrieved and evaluated.

A crawler’s operations must be defined before a crawl begins: the instructions and the order in which they run are set up in advance. The crawler then executes these instructions automatically and builds an index of the results, which can be accessed through output software.

A crawler will gather information from the web according to the instructions it receives.
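
The workflow described above (start from predefined instructions, follow links automatically, and build an index of the results) can be illustrated with a minimal Python sketch. It is only one possible arrangement, not how any particular search engine works. The names `LinkAndTextParser`, `crawl`, and `PAGES`, as well as the `example.test` URLs, are hypothetical, and a small in-memory dictionary stands in for real HTTP requests so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkAndTextParser(HTMLParser):
    """Collects link targets and visible words from one HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.words = []

    def handle_starttag(self, tag, attrs):
        # Record the href of every <a> tag.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        # Record lowercase words found in the page text.
        self.words.extend(data.lower().split())


def crawl(seed, fetch, max_pages=10):
    """Breadth-first crawl from `seed`; returns (index, link_graph)."""
    index = {}       # word -> set of URLs whose text contains it
    link_graph = {}  # URL -> list of URLs that page links to
    queue = deque([seed])
    seen = {seed}
    while queue and len(link_graph) < max_pages:
        url = queue.popleft()
        html = fetch(url)  # assumed to return None for unknown pages
        if html is None:
            continue
        parser = LinkAndTextParser()
        parser.feed(html)
        targets = [urljoin(url, href) for href in parser.links]
        link_graph[url] = targets
        for word in parser.words:
            index.setdefault(word, set()).add(url)
        for target in targets:
            if target not in seen:  # visit each page at most once
                seen.add(target)
                queue.append(target)
    return index, link_graph


# Hypothetical three-page site used in place of real HTTP requests.
PAGES = {
    "http://example.test/": '<a href="/a">A</a> <a href="/b">B</a> home',
    "http://example.test/a": '<a href="/b">B</a> spiders crawl',
    "http://example.test/b": "spiders index",
}

index, link_graph = crawl("http://example.test/", PAGES.get)
```

Here `index` plays the role of the card catalog (it maps each word to the pages that contain it), while `link_graph` records which page links to which. A real crawler would replace `PAGES.get` with an HTTP fetch and would typically also honor each site's `robots.txt` rules and rate limits.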

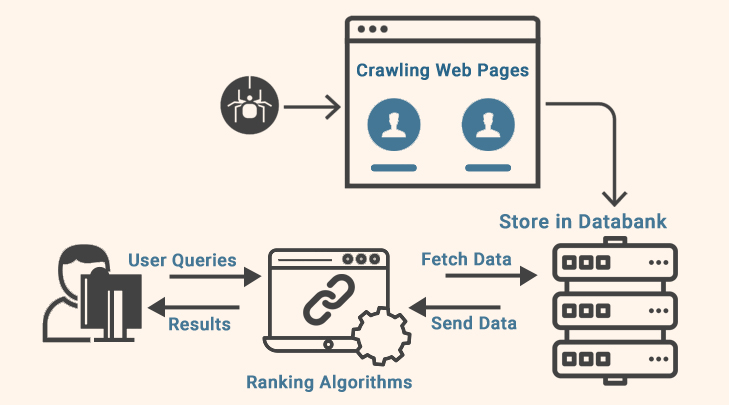

An example of how crawlers reveal link relationships is shown in this graphic: